Price Scraping: How It Works, Challenges, and the Best Tools to Use

You set your prices in the morning, but by midday, your competitor has dropped theirs. By the time you notice, you've already lost sales. This is a daily reality in e-commerce, where prices on Amazon, Walmart, and Target fluctuate constantly. If you’re not tracking these changes, you’re losing potential revenue.

Manually monitoring thousands of product prices isn’t feasible. Price scraping automates real-time price collection, helping businesses stay competitive, adjust strategies instantly, and maximize profits.

In this guide, we’ll break down how price scraping works, the best tools available, and the challenges you might face.

What Is Price Scraping?

Price scraping is the automated process of collecting pricing data from websites using web scraping tools or APIs. Businesses use price scraping to track competitor prices, analyze market trends, and optimize pricing strategies.

Price scraping is essential in industries where price fluctuations impact purchasing decisions, such as e-commerce, travel, finance, and B2B sectors.

For example, if you shop for electronics online, you’ve likely noticed prices changing within hours. Many online retailers use scraped competitor prices, along with demand and other factors, to adjust their own prices.

Regardless of your industry, tracking competitor pricing and responding quickly is essential for staying competitive.

How Does Price Scraping Work?

Price scraping follows a structured process that enables businesses to collect, analyze, and utilize pricing data. It automates competitor price tracking to help companies make informed decisions.

1. Identifying Target Websites

The first step is selecting the websites from which to extract pricing data. These could be competitor sites, marketplaces, price comparison platforms, or industry-specific sources.

For example, an e-commerce retailer may track prices on Amazon, Walmart, and Target to refine its pricing strategy. Physical stores and service-based businesses should also consider local competitors.

2. Identifying Your Competitive Assortment

Instead of relying on a single competitor, businesses should analyze a broad range of competitors. This ensures pricing strategies are based on industry-wide trends rather than isolated cases.

Some companies cut prices to clear excess inventory or because of internal policies, so a single competitor's price change may not reflect the wider market. Tracking multiple competitors helps businesses avoid reacting to these outliers.
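One simple way to make tracking multiple competitors pay off is to benchmark against the median competitor price and flag any competitor that deviates sharply from it. The following is a minimal sketch. The competitor names, prices, and the 20% threshold are illustrative assumptions, not recommendations.

```python
from statistics import median


def market_benchmark(prices: dict[str, float], threshold: float = 0.20):
    """Return the median competitor price and the competitors whose price
    deviates from that median by more than `threshold` (a fraction)."""
    benchmark = median(prices.values())
    outliers = {
        name: price
        for name, price in prices.items()
        if abs(price - benchmark) / benchmark > threshold
    }
    return benchmark, outliers


# Hypothetical prices for one product across five competitors
prices = {
    "store_a": 49.99,
    "store_b": 52.00,
    "store_c": 50.49,
    "store_d": 34.99,  # e.g. a clearance sale
    "store_e": 51.25,
}
benchmark, outliers = market_benchmark(prices)
print(benchmark)  # 50.49
print(outliers)   # {'store_d': 34.99}
```

The median is used instead of the mean so that one competitor's clearance sale does not drag the benchmark down.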

3. Determining the Frequency of Scraping

How often should pricing data be scraped? The answer depends on the industry.

- Airlines adjust ticket prices in real time based on demand.

- E-commerce businesses may require hourly updates.

- Retailers may only need daily or weekly price monitoring.

Choosing the right frequency lets businesses collect timely data without overloading their own systems or the websites they scrape.
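In practice, the frequency decision often becomes a per-category refresh interval that a scheduler checks before re-scraping a product. Here is a minimal sketch of that check. The category names and the specific intervals, including 15 minutes as a stand-in for "real time" airline pricing, are assumptions for illustration only.

```python
from datetime import datetime, timedelta

# Illustrative refresh intervals per category; tune these to your market.
SCRAPE_INTERVALS = {
    "airline": timedelta(minutes=15),
    "ecommerce": timedelta(hours=1),
    "retail": timedelta(days=1),
}


def is_due(category: str, last_scraped: datetime, now: datetime) -> bool:
    """Return True if a product in `category` should be scraped again."""
    return now - last_scraped >= SCRAPE_INTERVALS[category]


now = datetime(2025, 1, 1, 12, 0)
print(is_due("ecommerce", datetime(2025, 1, 1, 10, 30), now))  # True: 90 minutes elapsed
print(is_due("retail", datetime(2025, 1, 1, 10, 30), now))     # False: less than a day
```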

4. Extracting Pricing Data

Once target websites and competitor lists are identified, web scraping tools or APIs extract key details, including:

- Product prices

- Discounts and promotions

- Stock availability
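Scraped values usually arrive as display strings, such as "$1,299.99" or "In Stock", so they need normalizing before analysis. Below is a minimal sketch that turns these three fields into typed values. The input keys (`price`, `list_price`, `availability`) are hypothetical, and the parser assumes US-style number formatting. A format such as "1.299,99 €" would need different handling.

```python
import re
from decimal import Decimal


def parse_price(text: str) -> Decimal:
    """Convert a display price such as '$1,299.99' into a Decimal.
    Assumes US-style formatting (comma thousands separator, dot decimal)."""
    cleaned = re.sub(r"[^\d.]", "", text)  # drop currency symbols and commas
    return Decimal(cleaned)


def normalize_listing(raw: dict) -> dict:
    """Turn raw scraped strings into typed fields (input keys are hypothetical)."""
    price = parse_price(raw["price"])
    # If no original/list price was shown, treat the item as not discounted
    list_price = parse_price(raw["list_price"]) if raw.get("list_price") else price
    discount_pct = (list_price - price) / list_price * 100 if list_price else Decimal(0)
    return {
        "price": price,
        "list_price": list_price,
        "discount_pct": round(discount_pct, 1),
        "in_stock": raw.get("availability", "").strip().lower() == "in stock",
    }


print(normalize_listing({"price": "$79.99", "list_price": "$99.99", "availability": "In Stock"}))
```

`Decimal` is used instead of `float` so that prices such as 79.99 are stored exactly.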

5. Data Storage and Processing

Extracted data is stored in databases or spreadsheets for further analysis. Businesses clean, filter, and categorize the information to generate actionable insights.

For instance, a retailer might segment pricing data by brand, location, or competitor to identify trends.
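As a small illustration of storage plus segmentation, the sketch below loads scraped rows into SQLite and computes the average price per brand and competitor. The table layout, SKUs, brands, and prices are all hypothetical. An in-memory database keeps the example self-contained. Pass a file path to `sqlite3.connect` for persistent storage.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # use a file path for persistent storage
conn.execute(
    """CREATE TABLE prices (
        sku TEXT, brand TEXT, competitor TEXT, price REAL, scraped_at TEXT
    )"""
)

# Hypothetical rows from one scraping run
rows = [
    ("TV-55", "Acme", "store_a", 499.99, "2025-01-01"),
    ("TV-55", "Acme", "store_b", 479.00, "2025-01-01"),
    ("SB-10", "Sonix", "store_a", 129.99, "2025-01-01"),
    ("SB-10", "Sonix", "store_b", 139.99, "2025-01-01"),
]
conn.executemany("INSERT INTO prices VALUES (?, ?, ?, ?, ?)", rows)

# Segment the data: average price per brand and competitor
summary = conn.execute(
    """SELECT brand, competitor, ROUND(AVG(price), 2)
       FROM prices
       GROUP BY brand, competitor
       ORDER BY brand, competitor"""
).fetchall()

for brand, competitor, avg_price in summary:
    print(brand, competitor, avg_price)
```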

6. Analyzing Price Data and Implementing Strategy

Over time, businesses analyze pricing patterns and optimize their strategies. Benefits include:

- Adjusting prices dynamically based on market trends

- Optimizing profit margins while remaining competitive

- Identifying seasonal trends and price fluctuations

- Automating pricing updates in real time
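To make "adjusting prices while protecting margins" concrete, here is a minimal rule-based repricing sketch. It undercuts the lowest competitor by a small amount but never goes below cost plus a minimum margin. The 15% margin floor and one-cent undercut are assumptions for illustration. Real dynamic pricing systems typically weigh demand, inventory, and other signals as well.

```python
def suggest_price(our_cost: float, competitor_prices: list[float],
                  min_margin: float = 0.15, undercut: float = 0.01) -> float:
    """Undercut the lowest competitor slightly, but never drop below
    cost plus a minimum margin (both parameters are illustrative)."""
    floor = our_cost * (1 + min_margin)
    target = min(competitor_prices) - undercut
    return round(max(target, floor), 2)


print(suggest_price(40.00, [49.99, 52.00, 50.49]))  # 49.98
print(suggest_price(40.00, [45.00, 52.00]))         # 46.0 (margin floor applies)
```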

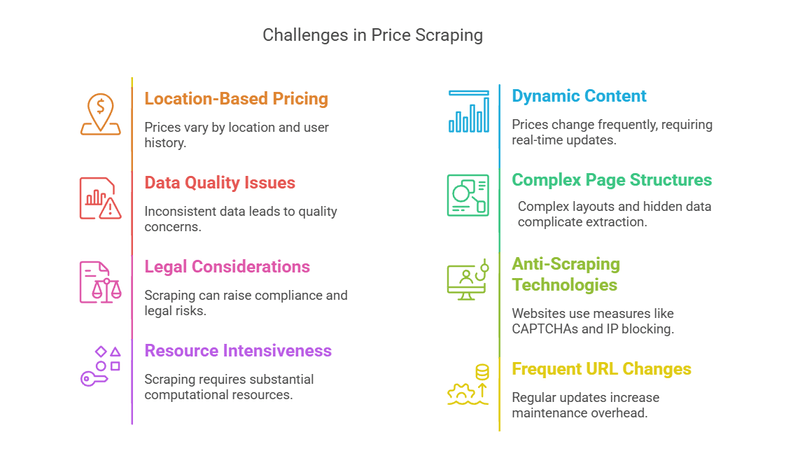

Primary Challenges of Price Scraping

Best Price Scraping Tools in 2025

Businesses and developers rely on various tools for price scraping. These tools range from cloud-based APIs that provide structured data to open-source frameworks that allow for custom extractions.

Users can choose between no-code solutions, lightweight parsing libraries, or full-scale automation frameworks depending on the scale, complexity, and technical expertise required.

1. Unwrangle

Best For: Businesses needing scalable, hassle-free price tracking.

Unwrangle provides real-time data extraction for e-commerce and business listing sites. Its APIs and no-code scrapers collect structured data, including product details, pricing, customer reviews, and search results, from platforms like Amazon, Costco, and Best Buy.

Key Features:

- Pre-built APIs for structured e-commerce data

- Supports Amazon, Target, Costco, and more

- No need for manual parser configuration

Pros:

- No proxy or CAPTCHA management required

- Unified schema for multiple platforms

- Bypasses anti-scraping protections

Cons:

- Does not support travel platforms

2. Scrapy

Best For: Developers handling complex, high-volume scraping projects

Scrapy is a Python framework optimized for large-scale web scraping. It supports asynchronous requests, middleware customization, and data exporting.

Key Features:

- Fast and scalable

- Asynchronous, concurrent requests with built-in item pipelines

- Exports data in JSON, XML, and CSV

Pros:

- Optimized for speed and efficiency

- Active community and extensive documentation

Cons:

- Requires advanced coding skills

- Not ideal for JavaScript-heavy sites

3. Beautiful Soup

Beautiful Soup is a lightweight Python library for parsing HTML and XML. It is ideal for small-scale scraping tasks with static web pages.

Key Features:

- Simple API for HTML/XML parsing

- Works well with Requests for fetching data

- Ideal for quick one-time scrapes

Best For: Beginners or small-scale projects requiring basic data extraction

Pros:

- Easy to learn and implement

- Flexible and lightweight

Cons:

- Not suitable for large-scale scraping

- Slower than Scrapy for bulk data extraction
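For a small job on a static page, Requests and Beautiful Soup are often enough. The sketch below keeps fetching and parsing separate, so the parser can be tested on saved HTML. The selectors (`h1.product-title`, `span.price`) and the `price-monitor/0.1` User-Agent string are hypothetical.

```python
import requests
from bs4 import BeautifulSoup


def parse_product(html: str) -> dict:
    """Extract name and price from a static product page (selectors are hypothetical)."""
    soup = BeautifulSoup(html, "html.parser")
    name = soup.select_one("h1.product-title")
    price = soup.select_one("span.price")
    return {
        "name": name.get_text(strip=True) if name else None,
        "price": price.get_text(strip=True) if price else None,
    }


def fetch_product(url: str) -> dict:
    """Download a page and parse it; raises requests.HTTPError on 4xx/5xx."""
    resp = requests.get(url, headers={"User-Agent": "price-monitor/0.1"}, timeout=10)
    resp.raise_for_status()
    return parse_product(resp.text)


if __name__ == "__main__":
    sample = '<h1 class="product-title"> Blender X </h1><span class="price">$89.00</span>'
    print(parse_product(sample))
```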

4. Selenium

Selenium automates browser interactions, making it useful for scraping dynamic content that traditional scrapers cannot access.

Key Features:

- Automates browser-based scraping

- Handles JavaScript-rendered content

- Supports multiple programming languages

Best For: Extracting data from interactive, JavaScript-heavy websites

Pros:

- Works on sites where static scrapers fail

- Supports multiple browsers

Cons:

- Slower due to full browser automation

- Requires significant system resources

5. Playwright

Playwright is an advanced automation framework that provides better browser control than Selenium. It efficiently handles AJAX, dynamic content, and network requests.

Key Features:

- Supports multiple browser engines (Chromium, Firefox, and WebKit)

- Handles AJAX, dynamic content, and network requests

- Provides auto-waiting for page elements

Best For: Developers scraping modern, JavaScript-driven sites

Pros:

- Faster than Selenium

- Built-in headless browsing for efficiency

Cons:

- Newer framework with a smaller community

- Requires advanced coding skills
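The following is a minimal Playwright sketch for a JavaScript-rendered product page. It uses network request interception to skip images, fonts, and media, and relies on Playwright's auto-waiting locators to read the price once it appears. The URL and `span.price` selector are hypothetical. Running it requires `pip install playwright` followed by `playwright install chromium`.

```python
BLOCKED_RESOURCE_TYPES = {"image", "font", "media"}


def should_block(resource_type: str) -> bool:
    """Skip heavy assets that are not needed to read a price."""
    return resource_type in BLOCKED_RESOURCE_TYPES


def scrape_rendered_price(url: str, selector: str) -> str:
    """Render a JavaScript-driven page in headless Chromium and return the price text."""
    # Imported here so should_block() stays usable without Playwright installed
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        # Abort requests for blocked resource types; let everything else through
        page.route(
            "**/*",
            lambda route: route.abort()
            if should_block(route.request.resource_type)
            else route.continue_(),
        )
        page.goto(url)
        # Locator actions auto-wait for the element to be attached and visible
        text = page.locator(selector).first.inner_text()
        browser.close()
        return text


# Example call (hypothetical URL and selector):
# scrape_rendered_price("https://example.com/product/123", "span.price")
```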

Real-World Applications of Price Scraping

Price scraping helps businesses track competitor pricing, optimize procurement, and improve decision-making. Companies use real-time data to adjust pricing, negotiate better deals, and streamline inventory management. Below are practical industry applications.

Competitive Price Tracking and Dynamic Pricing

In price-sensitive industries, staying competitive means keeping a close eye on market fluctuations. Price scraping automates this process, helping suppliers and manufacturers adjust pricing across multiple distributors and sales channels.

For example, if a competitor lowers bulk pricing on high-demand components, businesses can react by adjusting their own prices. Companies using tools like Unwrangle's e-commerce APIs can track price changes automatically and stay competitive without monitoring prices manually.

For more information, check out our blog post on Competitor Price Scraping.

Price Scraping for Market Research

Good pricing decisions come from understanding market trends. Businesses collect historical pricing data to spot demand patterns and adjust strategies ahead of time.

A company tracking raw material costs, for instance, can spot rising prices early and stock up before further increases. Similarly, developers working on pricing models rely on this data to fine-tune their predictions, helping businesses avoid costly miscalculations. Instead of reacting after the fact, companies can plan based on clear pricing patterns.

Procurement Optimization

For procurement teams, knowing when and where to buy materials makes a huge difference in overall costs. Price scraping allows businesses to monitor suppliers like Build.com, Home Depot, and Lowe’s to secure the best deals.

For example, construction businesses can track supplier prices to assess market trends, buy raw materials at the right time, and reduce expenses.

Inventory and Supply Chain Management

Price scraping isn’t just about finding the lowest price; it also helps businesses avoid stock shortages and manage inventory efficiently.

For example, a manufacturer sourcing industrial fasteners tracks supplier stock across multiple vendors. If one supplier starts running low while another still has ample supply at a stable price, the manufacturer can immediately adjust orders to prevent production slowdowns.
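The fastener example boils down to a small decision rule: among suppliers that can fill the whole order from current stock, pick the cheapest. Here is a simplified sketch of that rule. The supplier names, prices, and stock levels are hypothetical, and price stability over time is not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SupplierOffer:
    supplier: str
    unit_price: float
    stock: int


def choose_supplier(offers: list[SupplierOffer], quantity: int) -> Optional[SupplierOffer]:
    """Pick the cheapest supplier that can fill the whole order from stock."""
    eligible = [o for o in offers if o.stock >= quantity]
    return min(eligible, key=lambda o: o.unit_price, default=None)


offers = [
    SupplierOffer("vendor_a", 0.12, 800),      # cheapest, but running low
    SupplierOffer("vendor_b", 0.13, 50_000),
    SupplierOffer("vendor_c", 0.15, 20_000),
]
print(choose_supplier(offers, quantity=5_000).supplier)  # vendor_b
```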

Is Price Scraping Legal?

Price scraping is generally legal when done responsibly, although the rules vary by jurisdiction. Key factors affecting legality include website terms of service, data privacy laws, and how the extracted data is used.

Scraping publicly available pricing information is typically allowed, but businesses should avoid:

- Accessing data behind login pages or authentication walls.

- Extracting personal user data covered by privacy laws like GDPR or CCPA.

- Ignoring robots.txt files, which indicate scraping restrictions.

- Overloading servers with excessive requests.

Ethical & Legal Best Practices:

- Use rate limiting (and, where appropriate, rotating IPs) to avoid overloading servers and reduce the risk of being blocked.

- Ensure compliance with website policies and data protection regulations.
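Two of these practices, respecting robots.txt and limiting request rates, are straightforward to build into a scraper. The sketch below uses Python's standard `urllib.robotparser` and a simple delay-based limiter. The robots.txt content and the `price-monitor` user agent are hypothetical. In production, you would call `set_url()` and `read()` to fetch the site's real robots.txt.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "price-monitor"  # hypothetical crawler name


def load_rules(robots_lines: list[str]) -> RobotFileParser:
    """Parse robots.txt content supplied as a list of lines."""
    rp = RobotFileParser()
    rp.parse(robots_lines)
    return rp


class RateLimiter:
    """Enforce a minimum delay (in seconds) between consecutive requests."""

    def __init__(self, min_interval: float):
        self.min_interval = min_interval
        self._last = 0.0

    def wait(self) -> None:
        elapsed = time.monotonic() - self._last
        if elapsed < self.min_interval:
            time.sleep(self.min_interval - elapsed)
        self._last = time.monotonic()


rules = load_rules([
    "User-agent: *",
    "Disallow: /account/",
    "Crawl-delay: 2",
])
print(rules.can_fetch(USER_AGENT, "https://example.com/product/42"))      # True
print(rules.can_fetch(USER_AGENT, "https://example.com/account/orders"))  # False

# Honor the site's Crawl-delay if present, otherwise default to 1 second
limiter = RateLimiter(rules.crawl_delay(USER_AGENT) or 1.0)
# Call limiter.wait() before each request
```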

Final Thoughts

Price scraping is a powerful tool for businesses looking to stay ahead in competitive markets. By automating price tracking, companies can respond to market shifts in real time.

Selecting the right scraping tool depends on business needs, technical capabilities, and the complexity of the data required. Whether using cloud-based solutions like Unwrangle or developer-focused frameworks like Scrapy, price scraping allows businesses to remain agile in an ever-changing marketplace.